Hi,

I'd really appreciate any help or suggestions. I have a 50 GB file that I need to duplicate and move to a different folder. When I try to duplicate this large file, it fails with an “Unhandled error”. Duplicate works fine with small files.

Jupyterhub: 0.9.2

Amazon Linux: 2 (Karoo)

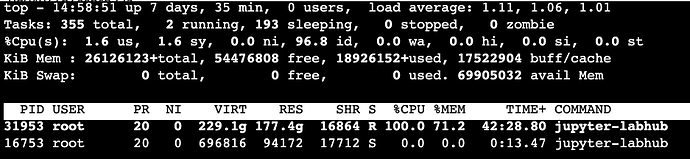

r5.8xlarge

Here is the debug log.

```
[I 2022-06-23 16:57:00.293 SingleUserLabApp handlers:134] Copying path/to/big/file/bigfile.sas7bdat to path/to/big/file/
[I 2022-06-23 16:58:04.076 JupyterHub log:158] 302 GET / → /hub (@::ffff:10.10.28.26) 0.66ms
[I 2022-06-23 16:58:04.115 JupyterHub log:158] 302 GET /hub → /hub/ (@::ffff:10.10.28.26) 0.55ms
[I 2022-06-23 16:58:04.175 JupyterHub log:158] 302 GET /hub/ → /hub/login (@::ffff:10.10.28.26) 0.56ms
[I 2022-06-23 16:58:04.236 JupyterHub log:158] 200 GET /hub/login (@::ffff:10.10.28.26) 1.14ms
[D 2022-06-23 17:00:59.847 JupyterHub proxy:678] Proxy: Fetching GET http://127.0.0.1:8001/api/routes
17:00:59.848 [ConfigProxy] info: 200 GET /api/routes
[E 2022-06-23 17:09:25.692 SingleUserLabApp web:1793] Uncaught exception POST path/to/big/file/?1656003420351 (::ffff:10.10.28.82)
HTTPServerRequest(protocol='http', host='workspace.in.aws:8192', method='POST', uri='path/to/big/file/?1656003420351', version='HTTP/1.1', remote_ip='::ffff:10.10.28.82')
Traceback (most recent call last):
  File "/usr/local/lib64/python3.7/site-packages/tornado/web.py", line 1704, in _execute
    result = await result
  File "/usr/local/lib64/python3.7/site-packages/tornado/gen.py", line 769, in run
    yielded = self.gen.throw(*exc_info) # type: ignore
  File "/usr/local/lib/python3.7/site-packages/notebook/services/contents/handlers.py", line 200, in post
    yield self._copy(copy_from, path)
  File "/usr/local/lib64/python3.7/site-packages/tornado/gen.py", line 762, in run
    value = future.result()
  File "/usr/local/lib64/python3.7/site-packages/tornado/gen.py", line 234, in wrapper
    yielded = ctx_run(next, result)
  File "/usr/local/lib/python3.7/site-packages/notebook/services/contents/handlers.py", line 136, in _copy
    model = yield maybe_future(self.contents_manager.copy(copy_from, copy_to))
  File "/usr/local/lib/python3.7/site-packages/notebook/services/contents/manager.py", line 446, in copy
    model = self.get(path)
  File "/usr/local/lib/python3.7/site-packages/notebook/services/contents/filemanager.py", line 434, in get
    model = self._file_model(path, content=content, format=format)
  File "/usr/local/lib/python3.7/site-packages/notebook/services/contents/filemanager.py", line 361, in _file_model
    content, format = self._read_file(os_path, format)
  File "/usr/local/lib/python3.7/site-packages/notebook/services/contents/fileio.py", line 320, in _read_file
    return encodebytes(bcontent).decode('ascii'), 'base64'
  File "/usr/lib64/python3.7/base64.py", line 532, in encodebytes
    return b"".join(pieces)
MemoryError
[W 2022-06-23 17:09:25.700 SingleUserLabApp handlers:608] Unhandled error
```
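Reading the traceback, the Duplicate request goes through the contents manager's `copy()`, which calls `get()` on the source file. `_read_file()` then appears to hold the whole file in memory and base64-encode it with `base64.encodebytes()`, and the `MemoryError` is raised at that function's final `b"".join(pieces)`. A rough sketch of how much memory that path needs, assuming CPython's `encodebytes()` chunking (57 input bytes per 77-byte output line) and ignoring per-object overhead; the helper names are hypothetical:

```python
import base64

# Assumption: mirrors CPython's base64.encodebytes(), which splits its input
# into 57-byte chunks and encodes each one as 76 characters plus "\n".
CHUNK_IN = 57
LINE_OUT = 77


def encodebytes_len(n: int) -> int:
    """Number of bytes base64.encodebytes() returns for an n-byte input."""
    full, rest = divmod(n, CHUNK_IN)
    size = full * LINE_OUT
    if rest:
        # A partial last chunk is padded to a multiple of 4 characters,
        # then gets its own trailing newline.
        size += 4 * ((rest + 2) // 3) + 1
    return size


def peak_bytes_at_join(n: int) -> int:
    """Rough lower bound on memory held while encodebytes() runs b"".join():
    the raw file bytes, the list of encoded pieces, and the joined result.
    The overhead of the ~n/57 separate piece objects is ignored."""
    return n + 2 * encodebytes_len(n)


if __name__ == "__main__":
    # Sanity check against the real implementation on small inputs.
    for size in range(500):
        assert encodebytes_len(size) == len(base64.encodebytes(bytes(size)))

    n = 50 * 10**9  # assumption: "50GB" read as 50 * 10**9 bytes
    gib = 2**30
    print(f"file:           {n / gib:7.1f} GiB")
    print(f"base64 output:  {encodebytes_len(n) / gib:7.1f} GiB")
    print(f"held at join(): {peak_bytes_at_join(n) / gib:7.1f} GiB (lower bound)")
```

By this estimate, the join alone needs about 3.7 times the file size at once, before counting the overhead of roughly 877 million chunk objects, so the practical limit on Duplicate appears to be available memory rather than a fixed size cap.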

Has anybody seen this type of error?

Is there a limit to the size of a file that can be duplicated?
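For comparison, a copy made outside the Contents API does not need to hold the file in memory: it can stream the data in fixed-size chunks straight into the target folder. A minimal sketch, assuming it is run from a notebook or Python console on the same single-user server; `copy_large_file` and its 64 MiB default chunk size are hypothetical choices, not part of Jupyter:

```python
import shutil
import tempfile
from pathlib import Path


def copy_large_file(src, dst_dir, chunk_size=64 * 1024 * 1024):
    """Copy src into dst_dir, streaming chunk_size bytes at a time.

    Memory use stays near chunk_size no matter how big src is, unlike
    reading the whole file and base64-encoding it.
    """
    src_path = Path(src)
    dst_path = Path(dst_dir) / src_path.name
    if dst_path.resolve() == src_path.resolve():
        # Opening the destination with "wb" would truncate the source.
        raise ValueError("destination is the source file itself")
    dst_path.parent.mkdir(parents=True, exist_ok=True)
    with src_path.open("rb") as fsrc, dst_path.open("wb") as fdst:
        shutil.copyfileobj(fsrc, fdst, length=chunk_size)
    shutil.copystat(src_path, dst_path)  # keep modification time and mode bits
    return dst_path


if __name__ == "__main__":
    # Small self-contained demo on a temporary file.
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "data.bin"
        src.write_bytes(b"x" * 1_000_000)
        out = copy_large_file(src, Path(tmp) / "copies", chunk_size=4096)
        print(out, out.stat().st_size)
```

The standard-library `shutil.copy2(src, dst_dir)` should behave similarly, since it also copies without reading the whole file into memory and preserves metadata; the explicit version just makes the chunk size visible.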

Thanks in advance.

Abe