The original MyBinder service included an optional PostgreSQL service running inside a MyBinder container, and as a New Year resolution I thought I’d have a look at some simple demos for running arbitrary services, such as Postgres, inside a Binder container.

The jupyter-server-proxy package lets you start a service from the New menu on the Jupyter notebook homepage, so that’s one possibility: create a menu option to start a service and then connect to it. But I’m looking more for a recipe for creating auto-starting, free-running services.

An alternative approach is to start a service from the repo2docker start config file. I’ve popped up a minimal example here for autostarting a headless OpenRefine service in a MyBinder repo and connecting to it using an OpenRefine python client in a notebook.
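The general shape of such a start file is sketched below. It assumes repo2docker’s convention that a `start` file runs before the notebook server and finishes with `exec "$@"`. The OpenRefine location (`$HOME/openrefine/refine`) and the log and PID file names are hypothetical placeholders for illustration, not the paths used in my example repo.

```bash
#!/bin/bash
# Sketch of a repo2docker `start` file that autostarts a background service.
# SERVICE_BIN is a HYPOTHETICAL install location: substitute the real command
# that launches your service (e.g. a headless OpenRefine server).
SERVICE_BIN="$HOME/openrefine/refine"

# Launch a command detached from the terminal, appending its stdout/stderr to
# a log file; prints the PID of the background process.
start_background() {
    local logfile="$1"
    shift
    nohup "$@" >> "$logfile" 2>&1 &
    echo $!
}

# Only try to start the service if the (hypothetical) binary is present.
if [ -x "$SERVICE_BIN" ]; then
    start_background "$HOME/service.log" "$SERVICE_BIN" > "$HOME/service.pid"
fi

# Do the normal Binder start thing: run whatever command repo2docker passed in.
exec "$@"
```

Running the service under `nohup ... &` lets it keep going alongside the notebook server, and recording the PID makes it easy to stop later with `kill "$(cat "$HOME/service.pid")"`.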

I’ve spent much of the afternoon engaged in false starts, mainly (I suspect) because my knowledge of how all the pieces fit together is a bit ropey, and I haven’t been keeping up with reading the docs.

So here are some things I’ve been flummoxed by (pointers would be appreciated):

- installing Linux apt packages from non-standard apt repositories: my first thought was that, rather than trying to figure out how to get umpteen different services running, if I could get one running via a Docker container inside a Binder container, that would generalise to every other dockerised application by using an appropriate `docker run` command inside the start file. BUT installing Docker with `apt-get install -y docker-ce` seems to require adding an additional apt repository, so how do I do that? Trying it in postBuild doesn’t seem to work, so my attempt at docker generality falls at the first hurdle.

-

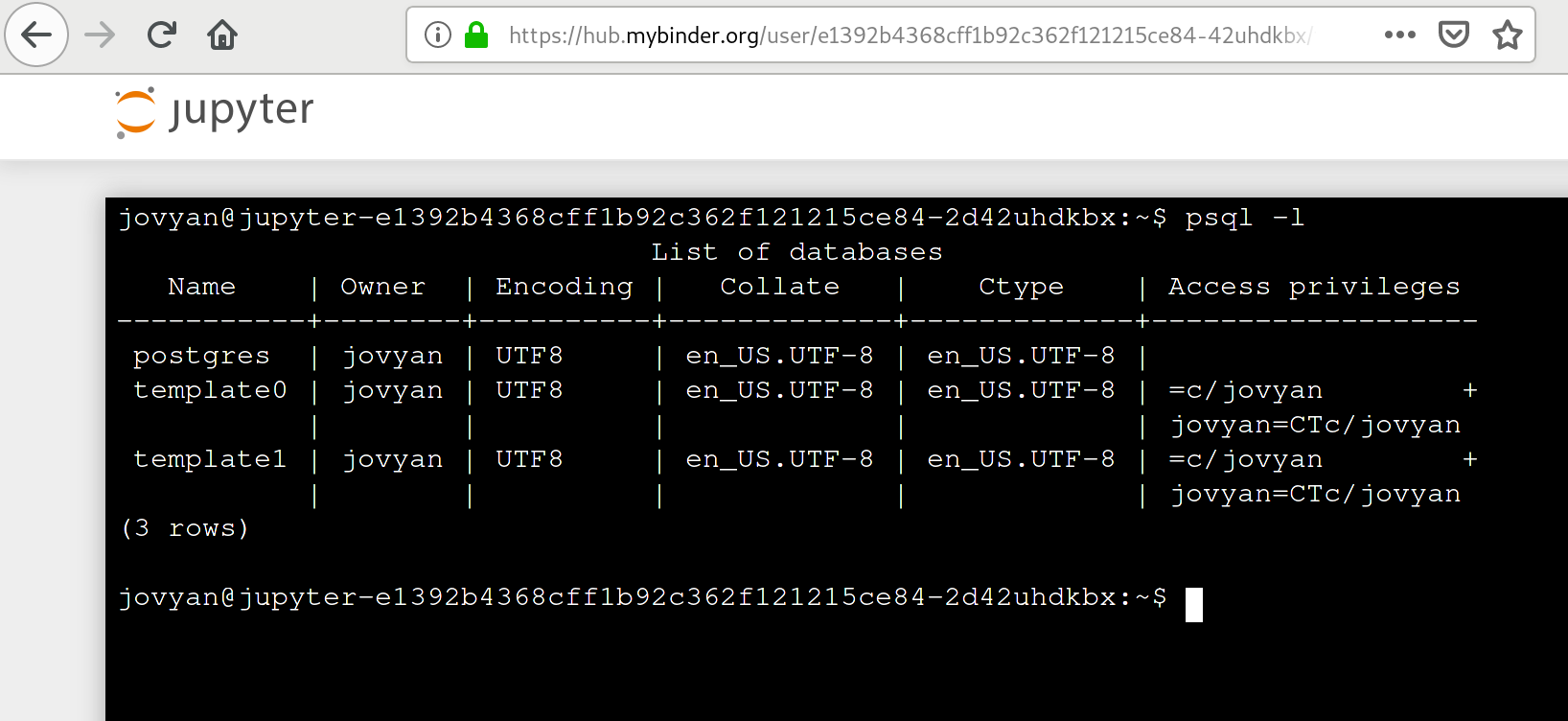

installing a service that is packaged to run under a particular user account: my thought here was if I could get a service running that runs under a particular user different to the jovyan/default user, it may generalise to working with other, similarly distributed applications. I’ve gone round the houses a few times trying to get postgresql installed in this way but with no success. For example, setting a start file to something like:

```bash
#!/bin/bash

export PGUSER=$NB_USER

nohup /usr/lib/postgresql/10/bin/pg_ctl -D /var/lib/postgresql/10/main -l logfile start > /dev/null 2>&1 &

# Do the normal Binder start thing here...
exec "$@"
```

I thought this would run the service under the default jovyan user rather than the postgres user, but it doesn’t seem to have much success: running the pg_ctl command explicitly in a terminal throws the error `pg_ctl: could not open PID file "/var/lib/postgresql/10/main/postmaster.pid": Permission denied`.
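My (unconfirmed) reading of that error is that the packaged cluster in `/var/lib/postgresql/10/main` belongs to the postgres user, while `PGUSER` only sets the default role that client tools such as psql connect as, not the account the server runs under. One thing that might be worth trying is initialising a fresh cluster in a directory the notebook user owns and running the server from there. A minimal sketch, assuming the PostgreSQL 10 binaries are installed under `/usr/lib/postgresql/10/bin` (e.g. via apt.txt) and using a hypothetical `$HOME/pgdata` data directory:

```bash
#!/bin/bash
# Sketch: run PostgreSQL as the notebook user from a user-owned data directory.
# Assumptions: PostgreSQL 10 binaries are in /usr/lib/postgresql/10/bin;
# PGDATA_DIR ($HOME/pgdata) is a HYPOTHETICAL location chosen for illustration
# and must not contain spaces (it is passed inside the -o option string).
PG_BIN=/usr/lib/postgresql/10/bin
PGDATA_DIR="$HOME/pgdata"

if [ -x "$PG_BIN/pg_ctl" ]; then
    # On first start only, create a cluster owned by the current user;
    # the current OS user becomes the database superuser.
    if [ ! -s "$PGDATA_DIR/PG_VERSION" ]; then
        "$PG_BIN/initdb" -D "$PGDATA_DIR" --auth=trust > "$HOME/initdb.log" 2>&1
    fi
    # Put the Unix socket in the data directory rather than /var/run/postgresql,
    # which the notebook user cannot write to; -w waits until startup completes.
    # pg_ctl start already runs the server in the background, so no nohup/&.
    "$PG_BIN/pg_ctl" -D "$PGDATA_DIR" -l "$HOME/postgres.log" \
        -o "-k $PGDATA_DIR" -w start
fi

# Do the normal Binder start thing here...
exec "$@"
```

Because the Debian-packaged client tools look for the socket in `/var/run/postgresql` by default, clients would then need to connect with `-h localhost` (or `-h "$HOME/pgdata"`).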

In addition, trying to do things like:

```bash
sudo su - postgres <<-EOF
psql -f ${HOME}/db/config.sql
EOF
```

fails because there’s no access to sudo, su and so on. But then again, seeding the database is not really relevant if the PostgreSQL server doesn’t even start.
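If the server is running as the notebook user (for example, from a cluster that user initialised itself with initdb), the seeding step shouldn’t need sudo or su at all: connect as the current user and run the SQL file directly. A minimal sketch, assuming the server accepts connections on localhost with trust authentication. `pg_isready` and `psql` are standard PostgreSQL client tools; the `wait_for` helper and its 30-second timeout are my own illustrative choices.

```bash
#!/bin/bash
# Sketch: seed the database as the current (notebook) user, no sudo/su needed.
# Assumes a server on localhost that trusts the current user; wait_for and the
# 30 s timeout are HYPOTHETICAL helpers/choices, not part of PostgreSQL.

# Retry a command once a second until it succeeds or the timeout (in seconds)
# runs out; returns 0 on success, 1 on timeout.
wait_for() {
    local timeout="$1"
    shift
    local i
    for ((i = 0; i < timeout; i++)); do
        "$@" && return 0
        sleep 1
    done
    return 1
}

# Only attempt seeding if the client tools and the SQL file are available.
if command -v pg_isready > /dev/null && [ -f "${HOME}/db/config.sql" ]; then
    if wait_for 30 pg_isready -h localhost -q; then
        # Stop at the first SQL error rather than ploughing on.
        psql -h localhost -d postgres -v ON_ERROR_STOP=1 -f "${HOME}/db/config.sql"
    else
        echo "PostgreSQL did not become ready; skipping seeding" >&2
    fi
fi
```

Waiting on `pg_isready` avoids racing the server if this runs in a start file straight after launching PostgreSQL.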

One other thing I wondered (but haven’t tried yet) is whether running repo2docker locally with the user set to postgres might help in this case, although that doesn’t generalise to running things on MyBinder, where the user defaults to something else (typically jovyan, I think).