Hi, I’m Jennifer, and I’m having trouble reading Hive data using a Scala kernel.

I’m testing the exact same code with the same hive-site.xml (Hive config file) in both spark-shell and the JupyterLab spylon-kernel.

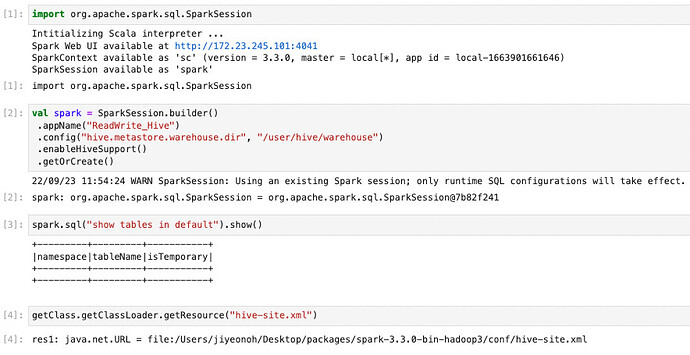

Here’s my JupyterLab code:

Here’s my spark-shell code:

scala> import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.SparkSession

scala> val sparkSession = SparkSession.builder().appName("ReadWrite_Hive").config("hive.metastore.warehouse.dir", "/user/hive/warehouse").enableHiveSupport().getOrCreate()
22/09/23 11:47:55 WARN SparkSession: Using an existing Spark session; only runtime SQL configurations will take effect.
sparkSession: org.apache.spark.sql.SparkSession = org.apache.spark.sql.SparkSession@186f51bb

scala> sparkSession.sql("show tables in default").show()
+---------+---------+-----------+
|namespace|tableName|isTemporary|
+---------+---------+-----------+
|  default|       t1|      false|
|  default|user_data|      false|
+---------+---------+-----------+

scala> getClass.getClassLoader.getResource("hive-site.xml")
res1: java.net.URL = file:/Users/jiyeonoh/Desktop/packages/spark-3.3.0-bin-hadoop3/conf/hive-site.xml
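The WARN line above shows that getOrCreate() reused a session that was already running, and Spark says only runtime SQL configurations take effect in that case, so builder options such as enableHiveSupport() may not apply to it. A minimal sketch for checking whether the session that actually exists uses the Hive catalog, to be run in both spark-shell and a spylon-kernel cell; it assumes both environments already have a SparkSession that getOrCreate() can return. `spark.sql.catalogImplementation` is a standard Spark setting whose values are "hive" or "in-memory" (the default).

```scala
import org.apache.spark.sql.SparkSession

// Return whatever session spark-shell or the notebook kernel already started
// (named `session` to avoid shadowing the predefined `spark`).
val session = SparkSession.builder().getOrCreate()

// "hive" means the Hive metastore catalog is in use; "in-memory" means it is not.
// This is a static setting, so enableHiveSupport() on a builder cannot change it
// for a session that already exists.
val catalogImpl: String =
  session.sparkContext.getConf.get("spark.sql.catalogImplementation", "in-memory")
println(s"spark.sql.catalogImplementation = $catalogImpl")

// Tables the current catalog can see in the "default" database.
session.catalog.listTables("default").show(false)
```

If spark-shell prints "hive" and the notebook prints "in-memory", the kernel's session was created without Hive support before this code ran.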

I couldn’t find many references; the ones I’ve tried are:

- (Optional) Configuring Spark for Hive Access - Hortonworks Data Platform

- https://groups.google.com/g/cloud-dataproc-discuss/c/O5wKJDW9kNQ

There are no Hive- or Spark-related entries in the JupyterLab logs. Here are the logs:

[W 2022-09-23 09:47:49.887 LabApp] Could not determine jupyterlab build status without nodejs
[I 2022-09-23 09:47:50.393 ServerApp] Kernel started: afa4234d-48ac-4505-b6a0-e3fa220161cd
[I 2022-09-23 09:47:50.404 ServerApp] Kernel started: 9404ff88-622f-4ba8-86b1-404d648588fc
[MetaKernelApp] ERROR | No such comm target registered: jupyter.widget.control
[MetaKernelApp] WARNING | No such comm: 94bab30b-35b1-48bf-bb51-6000d46df671
[MetaKernelApp] ERROR | No such comm target registered: jupyter.widget.control
[MetaKernelApp] WARNING | No such comm: 936a8763-707c-4076-8471-7ceed85ccb53
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
[I 2022-09-23 09:57:52.541 ServerApp] Saving file at /scala-spark/Untitled.ipynb
[I 2022-09-23 09:59:52.580 ServerApp] Saving file at /scala-spark/Untitled.ipynb
[I 2022-09-23 10:21:55.527 ServerApp] Kernel restarted: 9404ff88-622f-4ba8-86b1-404d648588fc
[MetaKernelApp] ERROR | No such comm target registered: jupyter.widget.control
[MetaKernelApp] WARNING | No such comm: 6eb29ba4-7dab-4314-ace4-88488935840b
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
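Since these logs don't show which configuration the kernel's JVM picked up, a small standard-library-only sketch like this could be run in both spark-shell and a notebook cell and the outputs compared. `EnvReport` is a hypothetical helper name; the environment variables checked are the ones Spark's launch scripts use to locate its home and config directories, and which of them matter in this particular setup is an assumption.

```scala
// Hypothetical helper: reports where hive-site.xml is visible and which
// Spark/Hadoop location variables are set in the current JVM.
object EnvReport {
  // Same lookup as the getResource call in the spark-shell session above.
  def findOnClasspath(name: String): Option[String] =
    Option(getClass.getClassLoader.getResource(name)).map(_.toString)

  // Variables Spark's launch scripts read to find SPARK_HOME and config dirs.
  val envVars: Seq[String] = Seq("SPARK_HOME", "SPARK_CONF_DIR", "HADOOP_CONF_DIR")

  def report(): Seq[String] = {
    val resourceLine =
      "hive-site.xml -> " + findOnClasspath("hive-site.xml").getOrElse("NOT on classpath")
    val envLines = envVars.map(v => s"$v = ${sys.env.getOrElse(v, "<unset>")}")
    resourceLine +: envLines
  }
}

EnvReport.report().foreach(println)
```

If hive-site.xml is found in spark-shell but reported as not on the classpath in the notebook, that would suggest the kernel is launched with a different SPARK_HOME or conf directory.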

I’ve also tried this in Jupyter Notebook, and I removed and reinstalled the kernel, but the result was the same.

What could I be missing?

Where should I look? Please help.

Thanks

Jennifer