The Dockerfile for the above image is below:

FROM jupyter/all-spark-notebook:2343e33dec46

ENV HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

RUN rm -rf /opt/hadoop/ &&http://archive.apache.org/dist/hadoop/common/hadoop-3.1.2/hadoop-3.1.2.tar.gz > /dev/null 2>&1 &&

RUN rm -rf /opt/spark/ && http://archive.apache.org/dist/spark/spark-2.4.3/spark-2.4.3-bin-without-hadoop.tgz > /dev/null 2>&1 &&

ENV SPARK_DIST_CLASSPATH=/opt/hadoop/etc/hadoop:/opt/hadoop/share/hadoop/common/lib/*:/opt/hadoop/share/hadoop/common/*:/opt/hadoop/share/hadoop/hdfs:/opt/hadoop/share/hadoop/hdfs/lib/*:/opt/hadoop/share/hadoop/hdfs/*:/opt/hadoop/share/hadoop/mapreduce/lib/*:/opt/hadoop/share/hadoop/mapreduce/*:/opt/hadoop/share/hadoop/yarn:/opt/hadoop/share/hadoop/yarn/lib/*:/opt/hadoop/share/hadoop/yarn/*

ENV SPARK_HOME=/usr/local/spark

RUN pip3 install boto3

RUN cd /usr/local/spark/jars/ &&

RUN pip3 install wheel

#COPY entrypoint.sh entrypoint.sh

#RUN chmod -R 777 entrypoint.sh

RUN pip3 install --no-cache-dir -r requirements.txt

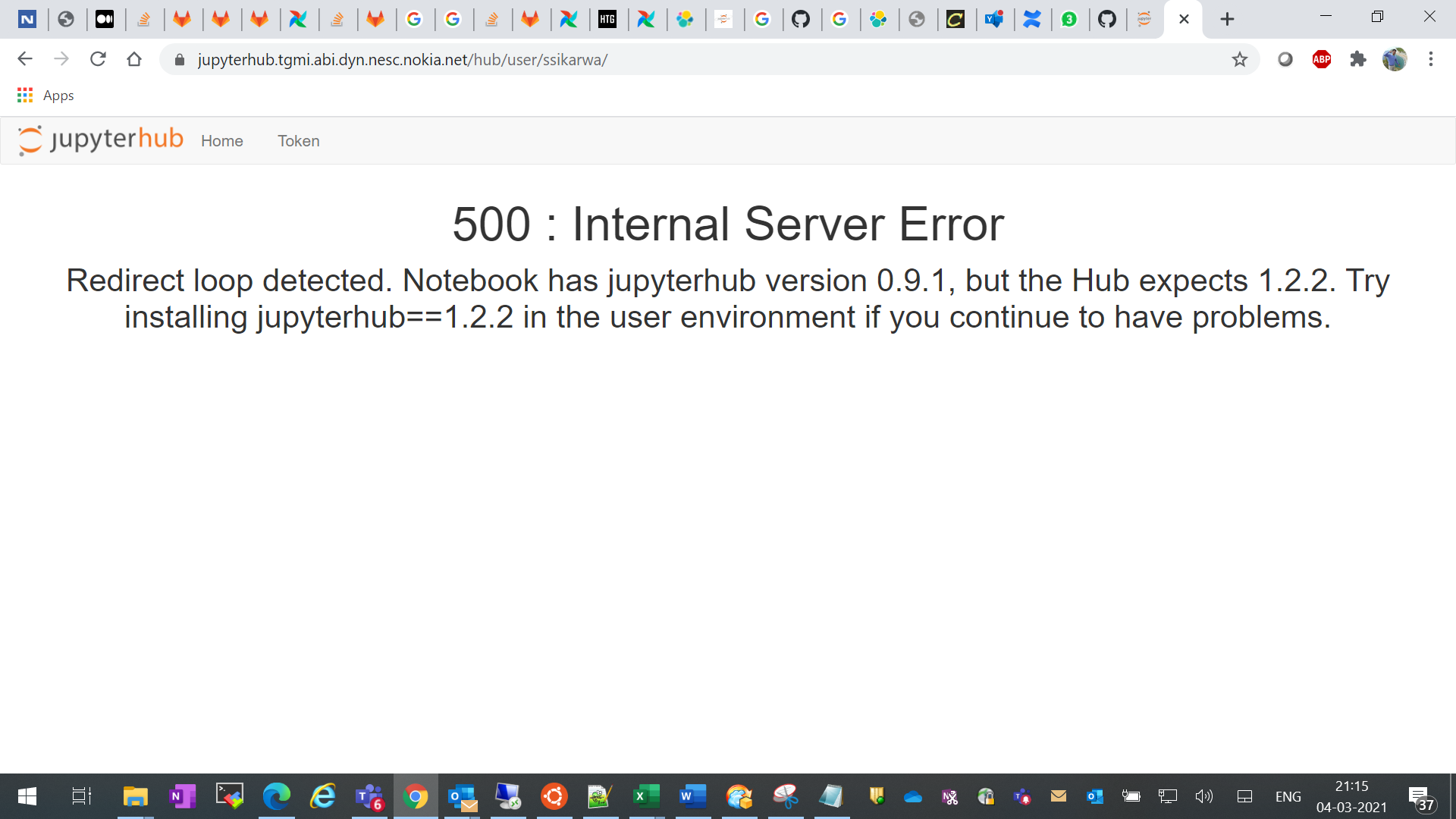

manics

March 6, 2021, 7:10pm

Have you tried the suggestion from the error message? Also, jupyter/all-spark-notebook:2343e33dec46 is a very old image. Can you use a more recent one?

Thanks for the reply, @manics. The mistake was mine: my ingress was routing to the hub service instead of proxy-public.

It worked after that.
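
For anyone who hits the same problem, here is a minimal sketch of an Ingress that routes to proxy-public rather than hub. It assumes the Zero to JupyterHub chart defaults, where the proxy-public Service exposes a port named http. The Ingress name, namespace, and host are placeholders for illustration and are not taken from the setup in this thread.

```yaml
# Sketch only: metadata and host below are hypothetical placeholders.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: jupyterhub            # placeholder name
  namespace: jhub             # placeholder: the namespace the chart is installed in
spec:
  rules:
    - host: jupyterhub.example.com   # placeholder host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                # Route to the proxy, not to the "hub" Service: the proxy
                # forwards requests to the hub and to user servers.
                name: proxy-public
                port:
                  name: http   # assumed chart default port name
```

Browser traffic has to enter through the proxy, because it routes requests both to the hub and to each user's notebook server. Pointing the Ingress straight at the hub Service bypasses that routing.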