Here’s the response from AWS support. In short, they suggest that a shared folder can be achieved by setting up persistence; it’s then possible to use the persistent storage in S3 as a shared space.

They are still looking into a local shared space. Personally, I think that would be preferable, as it would allow me to leverage the user and group controls afforded by JupyterHub.

AWS support response:

I understand you are running JupyterHub through AWS EMR and need to create some shared folders for the groups. Please correct me if I’ve misunderstood.

I first checked the link you provided. According to their code, they achieve this by creating a directory that is accessible to all group users.

++++++++++++

https://github.com/kafonek/tljh-shared-directory/blob/8f82b4338e60808c05cfc0798fe1929c3b9c6e6b/tljh_shared_directory.py

++++++++++++

The first thing we need to establish is whether you are storing the notebooks in S3 or in a local directory on the notebook server.

User notebooks and files are saved to the file system on the master node. This is ephemeral storage that does not persist through cluster termination. So we can store these files in S3 by configuring persistence for notebooks in your cluster with Jupyter [1].

If you store files in S3, what we can do is create a shared folder in S3 under the “jupyter” folder, or in any other S3 bucket, and use this location to keep the shared files. For example, you can run the command below to copy a notebook from the shared location to devuser1’s home directory:
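(Not part of the AWS reply.) For reference, here is a minimal sketch of what the persistence configuration described in [1] might look like, written as a small Python script that prints the JSON to supply when creating the cluster. The `jupyter-s3-conf` classification and its two properties are as I understand them from the EMR documentation, so please check them against [1]; the bucket name `mybucket` is only a placeholder.

```python
import json


def jupyter_s3_persistence_config(bucket):
    """Build an EMR configuration list that enables S3 persistence for JupyterHub.

    `bucket` is the S3 bucket name only (no s3:// prefix); it is a placeholder here.
    """
    return [
        {
            "Classification": "jupyter-s3-conf",
            "Properties": {
                # EMR configuration property values are strings, not booleans.
                "s3.persistence.enabled": "true",
                "s3.persistence.bucket": bucket,
            },
        }
    ]


if __name__ == "__main__":
    # Print the JSON so it can be saved to a file and passed in at cluster creation.
    print(json.dumps(jupyter_s3_persistence_config("mybucket"), indent=2))
```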

++++++++++

aws s3 cp s3://mybucket/jupyter/shared/note.ipynb s3://mybucket/jupyter/devuser1/

++++++++++
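(Not part of the AWS reply.) If the copy needs to be repeated for several users, the same command can be wrapped in a short Python helper. This is only a sketch: it assumes the AWS CLI is installed and that the instance role has access to the bucket, and the function names and the `jupyter/shared` layout are my own placeholders taken from the example above.

```python
import subprocess


def shared_copy_command(bucket, notebook, user,
                        shared_prefix="jupyter/shared", user_root="jupyter"):
    """Return the `aws s3 cp` argument list for copying a shared notebook to a user."""
    src = f"s3://{bucket}/{shared_prefix}/{notebook}"
    dst = f"s3://{bucket}/{user_root}/{user}/"
    return ["aws", "s3", "cp", src, dst]


def copy_shared_notebook(bucket, notebook, user):
    """Run the copy; raises CalledProcessError if the AWS CLI reports a failure."""
    subprocess.run(shared_copy_command(bucket, notebook, user), check=True)
```

For example, `copy_shared_notebook("mybucket", "note.ipynb", "devuser1")` would run the same command as shown above.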

Please note that the IAM role attached to the master instance group is used by the JupyterHub users for accessing these S3 objects. It is recommended to allow only read and write permissions to the S3 buckets. Please refer to [2] under References for more information.

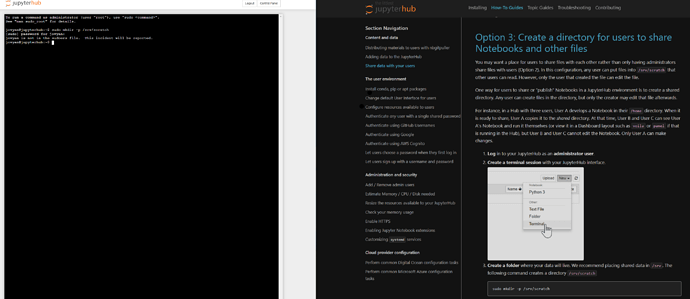

If you save data to a local directory and want to create the shared folder locally, then according to the steps from TLJH, they create a shared folder that all users are allowed to use. I am not familiar with this, so please allow some time for testing this approach.
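(Not part of the AWS reply.) To make the local option concrete, this is roughly what I mean by a shared folder governed by group permissions. It is a generic POSIX sketch, not the TLJH plugin's code and not something AWS has confirmed works on EMR; the function name is hypothetical, and changing the group owner would normally need root.

```python
import os
import stat


def make_shared_dir(path, gid=None):
    """Create `path` as a group-writable directory with the setgid bit requested.

    Returns the resulting permission bits so the caller can check them.
    """
    os.makedirs(path, exist_ok=True)
    if gid is not None:
        # Hand the directory to the shared group; usually requires root.
        os.chown(path, -1, gid)
    # rwxrwxr-x plus setgid, so that new files inherit the directory's group.
    os.chmod(path, 0o2775)
    return stat.S_IMODE(os.stat(path).st_mode)
```

With root access, a call such as `make_shared_dir("/srv/shared", gid=some_group_id)` would be the idea, where both the path and the group are placeholders.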

References:

[1] Configuring persistence for notebooks in Amazon S3 https://docs.aws.amazon.com/emr/latest/ReleaseGuide/emr-jupyterhub-s3.html

[2] Configure roles in EMR cluster https://docs.aws.amazon.com/emr/latest/ManagementGuide/emr-iam-roles.html

As a side question, any ideas on how to execute root commands in JupyterHub on AWS EMR? I wanted to try creating the shared folder locally, as I did with TLJH, but it looks like the admin user doesn’t have the permissions to do so, and I’m unsure how to access the root account.